This workshop deals with estimating linear curves using PROC REG and PROC GLIMMIX. I will be using examples from Bowley, S.R. 2015. A Hitchhiker’s Guide to Statistics in Biology: Generalized Linear Mixed Model Edition. Plants et al. Inc., Guelph, Ontario.

Linear Regression

It’s funny how many people I talk with that remember the following phrase:

Y = mX + b

Our math teachers in high school did a great job with this equation, since most of us remember it. We also tend to remember what the different parts mean:

m = slope or rise/run

b = Y-intercept or where our line crosses the Y-axis

As soon as we say Y=mX + b, the anxiety that accompanies statistics seems to disappear. Of course! we can do this! But, how in the world do we accomplish this in SAS. No worries, it is fairly straightforward, as long as you remember your goal of Y = mX + b.

We will be using Example 5.3 to demonstrate a Simple Linear Regression. As statistical procedures mature and new SAS PROCs are developed, it turns out that you can run this analysis in several PROCs. However, we will concentrate on 2: PROC REG and PROC GLIMMIX.

Download SAS coding for Example 5.3 here.

PROC REG

Proc reg data=linear_reg;

model absorb = glucose;

Run;

Quit;

Proc reg data=linear_reg; Calls the PROCedure REG and using the linear_reg dataset

model absorb = glucose; This is our Y = mX + b – we only have the Y and X in our dataset – absorb = Y and glucose = X. e want SAS to estimate m and b

Run;

Quit; <- We need to add a Quit for PROC REG, it will keep running in the background until we give it more instructions. So let’s finish it off by adding the quit. It gives us results (if successful) regardless.

To review the output in PDF form, please download here.

From our output our simple linear regression equation is:

Y = 0.00073*X + 0.0047 or absorb = 0.00073 * glucose + 0.0047

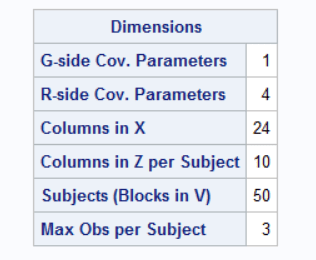

PROC GLIMMIX

Let’s restart our linear regression estimation by using PROC GLIMMIX and using the problem model to account for our RCBD design. Remember that in SAS we need 2 statements to run a proper mixed model: MODEL for our fixed effects and RANDOM for our random effects. To run this please use the following coding:

Proc glimmix data=linear_reg;

class block;

model absorb = glucose / solution;

random block / solution;

covtest “block=0” 0 .;

Run;

Just to restate: take notice that we have a random effect of Block? With PROC REG we cannot account for any random effects that may be in our design. This is one of the benefits of using PROC GLIMMIX, you can do a much better job at modelling your data correctly.

Proc glimmix data=linear_reg; Calls the PROC GLIMMIX and specifies the linear_reg dataset

class block; Lets SAS know that we have a classification or grouping variable: BLOCK

model absorb = glucose / solution; Here is our Y = mX + b. In order to obtain the estimates for our slope and our Y-intercept, we need to add the option SOLUTION after our model statement.

random block / solution; Our design had a random BLOCK, to better represent our design we are adding the random effect of BLOCK. By adding the option SOLUTION we will obtain an estimate for the BLOCK effect

covtest “block=0” 0 .; Of course, we want to know whether the BLOCK effect is significantly different from 0, so we will add the COVTEST statement here

Run;

To review the SAS output, download here (PDF).

Comparing Regression Response

Now let’s take a look at another example where we may have several treatments being applied at the same time. Let’s be honest, it is not very often where we will only have one outcome measure with one predictor. Let’s use Example 6.4 from S.R. Bowley’s text. The experiment in this example looks at the yield of 3 varieties of alfalfa seeded at 4 different rates: 6, 12, 18, and 24 kg/ha). We are interested in seeing if there are differences between the varieties and the seeding rates (factorial design of treatments since all the combinations exist). The trial was conducted in a 3 replicate RCBD experimental design.

The statistical model would be:

Where:

block = RANDOM effect

variety = FIXED effect

rate = FIXED effect

variety x rate = FIXED effect

Translates directly to SAS as:

model yield = variety | rate;

random block;

There are 3 steps to conducting this analysis:

Step 1 Variance Analysis and Means

We want to get an overall picture as to how the variation in our yield outcome measure is partitioned among the parts of our model.

Step 2 Regression partitions

Why are we doing regression if we want to know if there are variety and rate differences in yield? Didn’t we just answer that in Step 1? YES we did, but because one of our factors – RATE – is a quantitative measure – rather than simply saying there are differences at rate x for variety y – let’s go further and look at the trends or regressions across the rates for each variety. This gives us more information about our treatments.

To do this we are going to take our first model and break it up into different components to run the regression part. First though we need to create a new variable that takes our rates (6, 12, 18, and 24) and looks at them as quantitative measures and not as categorical measures as we did with in Step 1. When the variable is listed in the CLASS statement of our analysis, we are telling SAS to treat that variable as a categorical variable – with the groups labelled as 6, 12, 18, and 24. By doing this SAS treats it as 4 groups – it does not recognize the relationships between them. So, we create a new variable, let’s call it “x” that contains the same contents as our variable “rate”. But now we will NOT list it in the CLASS statement, so now SAS sees the variable “x” and it has the values of 6, 12, 18, and 24 – but now it sees the values with 6 units in between each. We will use this new variable in the creation of our regression equations.

Step 1 model ————— Step 2 model

Variety Variety

Rate x = linear component of Rate

x*x = quadratic component of Rate

x*rate = lack of fit° component of Rate

Variety * Rate x*variety = linear component of Rate for each variety

x*x*variety = quadratic component of Rate for each variety

x*rate*variety = lack of fit° component of Rate for each variety

° Lack of fit can be interpreted as all other fits beyond the quadratic. We can easily describe a linear and a quadratic relationship, but cubic, quartic, etc… can get complicated – so we use the term “lack of fit” to include all curve fits beyond the quadratic. Lack of fit to the linear and quadratic.

You will use the results of Step 2 analysis to decide what kind of relationship exists between our levels of Rate: linear, quadratic, or lack of fit.

Step 3 Regression Coefficients

Now that we know what type of relationship we have between our levels of Rate, now we need to run the analysis for a 3rd time to obtain the regression coefficients. A couple of notes to consider when running this part of the analysis:

- Parms statement – since we already know the model that should be run, with this statement we provide SAS with the estimates of our covariance parameters (random effects) so there are no iterations required to resolve the correct model.

- NOINT option in the model statement. NOINT is telling SAS that we do not want a y-intercept for the entire model. In other words, we are telling SAS not to force our 3 regression equations to pass through the same intercept.

Step 4 – can be combined with Step 3 – Regression Comparisons

Now that we have our regression equations, the big question on our minds is: Are they the same or different?

To answer this question, we need to create a series of ESTIMATE and CONTRAST statement to compare each regression coefficient.

Don’t forget you can also use these equations to provide you with a visualization of your varieties and rates!